Introduction: The Leap Motion Assignment

This was a research exercise I did for Leap Motion in October 2014 to explore the challenges of integrating gesture technology with VR headsets. For this assignment I used an Oculus Rift. The goal was to see whether an outside UI/UX designer could identify the limitations of the device and work within them to create a great experience for users. UI designers branching into gesture technology will be pioneers in the field, so this was a great opportunity for me. What qualified me for the role was my two years of work integrating gesture technology, starting with my experiments with the Leap Motion controller and the Oblong gesture device. In addition, my mentor, Lucio Campanelli, worked on the Cammono Head Tracking System in a collaboration with Oblong Technologies and iMotion, and he felt that we were the only ones qualified for this task.

Feedback on Leap Motion's out-of-box experience

Unboxing the Leap Motion was fantastic. I installed the drivers on both my Mac and my PC. I couldn't ask for a simpler path from setup and installation to immediately using the Leap Motion App and getting new apps from the landing site. The games were fun.

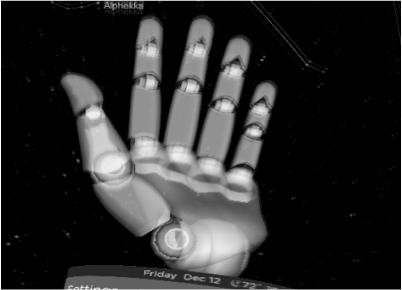

The splash demo was exciting. The only tracking issue I noticed was that the device would sometimes confuse the front and back of my hands. Complex gestures, such as curling my fingers, also caused problems: the recognition could get confused as it recreated the pose in 3D. What really made the device cumbersome was its very specific field of view and range. Reaching for things at the limits of that range became very difficult, and it was easy for my hand to leave the area above the sensor plate. Backing away from the controller didn't help; in fact, it made things worse. Perhaps magnifying the sensitivity, as you can with a mouse, would remove the need to make wide reaches with my hands.
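The mouse-style sensitivity idea can be sketched as a gain applied to each frame's hand displacement before it drives the virtual hand or cursor, so small physical movements cover more virtual distance. This is a minimal sketch under stated assumptions, not part of the Leap Motion SDK: the function `apply_gain` and its parameters `base_gain`, `accel`, and `max_gain` are hypothetical, and the input is assumed to be a per-frame palm displacement in millimetres.

```python
import math

Vec3 = tuple[float, float, float]

def apply_gain(delta: Vec3, base_gain: float = 2.0,
               accel: float = 0.05, max_gain: float = 6.0) -> Vec3:
    """Scale one frame's hand displacement (mm) into a cursor displacement.

    Hypothetical parameters: the gain grows with hand speed, like pointer
    acceleration on a mouse, so slow movements stay precise while fast
    movements reach farther without stretching past the sensor's range.
    """
    speed = math.sqrt(sum(c * c for c in delta))  # displacement magnitude this frame
    gain = min(base_gain + accel * speed, max_gain)  # cap so fast jerks don't overshoot
    return tuple(c * gain for c in delta)

# A slow 5 mm movement is amplified gently; a fast 100 mm sweep hits the cap.
print(apply_gain((3.0, 4.0, 0.0)))
print(apply_gain((100.0, 0.0, 0.0)))
```

Scaling the whole vector by one gain keeps the direction of the hand's motion intact, which matters when the virtual hand must still line up with what the user sees.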

UPDATE 11/2015: The Leap Motion team assured me that the V3 tracking update would be a significant improvement.

The VR Planetarium experience and its 3D UI were fantastic. I had no problem adjusting the settings. I apologize that it took so long to review this aspect of the program. Installing Unity, the developer kits, and the tools was no problem for me, but hunting down an Oculus was difficult. Luckily, I worked with a Unity developer at Nexon who had made the move to Oculus.

I think mounting the Leap Motion device on the Oculus is a smart move. I didn't have it set up this way and had range issues. I imagine a mounted device would work better because, as long as my hands were in front of my face, the device would pick them up. In my experience with the Kinect, I could reach as far as I wanted because the sensor was positioned in front of a screen 8 to 15 ft away.

Challenges in VR UI design

The user always needs to see a representation of the hand.

The first challenge in VR UI design is knowing where the hand is. Think of it like a mouse cursor. The representation of the hand needs to reflect what the user is doing, but it must not obscure the UI around it.

The size of assets (buttons, widgets, 3D elements) affects speed and accuracy.

I really like this portion of the splash demo. Even though the flower petals are so small, the ability to grab individual ones in such close proximity really shows off the device's sensitivity and fine range of motion.

The larger buttons in the settings portion were fast and responsive. However, let's look at the dials. Dragging up and down isn't a problem; fine control when the text is densely packed is.

Setting the day and time on a dial is cumbersome. The size of the text and its proximity on the dial make it hard to control.
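The observation that small, tightly packed targets are slower and less accurate to hit matches Fitts's law, a standard model of pointing. The sketch below uses its Shannon formulation, ID = log2(D / W + 1), where D is the distance to the target and W is its width, and predicts movement time as MT = a + b · ID. The distances, widths, and the constants `a` and `b` are hypothetical placeholders; real values would have to be measured for Leap Motion input.

```python
import math

def index_of_difficulty(distance: float, width: float) -> float:
    """Shannon formulation of Fitts's law: ID = log2(D / W + 1), in bits."""
    return math.log2(distance / width + 1)

def movement_time(distance: float, width: float,
                  a: float = 0.2, b: float = 0.15) -> float:
    """Predicted time in seconds, MT = a + b * ID.

    a and b are hypothetical constants; in practice they are fit from
    user trials on a specific input device.
    """
    return a + b * index_of_difficulty(distance, width)

# Hypothetical comparison, both targets 300 mm from the hand:
button = index_of_difficulty(300, 60)     # large settings button, log2(6) ≈ 2.58 bits
dial_label = index_of_difficulty(300, 10)  # packed dial label, log2(31) ≈ 4.95 bits
print(round(button, 2), round(dial_label, 2))
```

Shrinking the target from 60 mm to 10 mm at the same distance nearly doubles the difficulty, which is one way to quantify why the dial felt harder to control than the settings buttons.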

Placement of UI in a 360-degree environment

Placement is always a challenge, even in 2D. The user can drag the surrounding UI around themselves, but a VR headset also lets them physically turn and look.
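One way to model the two strategies described above is to arrange UI panels on a ring around the user: dragging the surrounding UI changes a shared yaw offset, while physically turning the head changes nothing in the layout. This is a minimal sketch under stated assumptions; the function `place_panels`, the radius, and the eye height (in metres) are hypothetical, and the axes are assumed to follow Unity's convention (y up, +z forward, yaw measured clockwise when seen from above).

```python
import math

def place_panels(count: int, radius: float = 1.5,
                 yaw_offset_deg: float = 0.0, eye_height: float = 1.6):
    """Spread `count` panels evenly on a ring centred on the user.

    Returns (x, y, z, yaw_deg) for each panel. Dragging the surround UI is
    modelled as changing yaw_offset_deg; head turning needs no update.
    """
    panels = []
    for i in range(count):
        yaw = (yaw_offset_deg + i * 360.0 / count) % 360.0
        rad = math.radians(yaw)
        x = radius * math.sin(rad)  # +x is to the user's right
        z = radius * math.cos(rad)  # +z is straight ahead at yaw 0
        panels.append((x, eye_height, z, yaw))
    return panels

# Four panels; a drag of 90 degrees rotates the whole ring together.
for panel in place_panels(4, yaw_offset_deg=90.0):
    print(panel)
```

Keeping every panel at the same radius means each one stays equally reachable, which also helps with the hand-range limits noted earlier.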

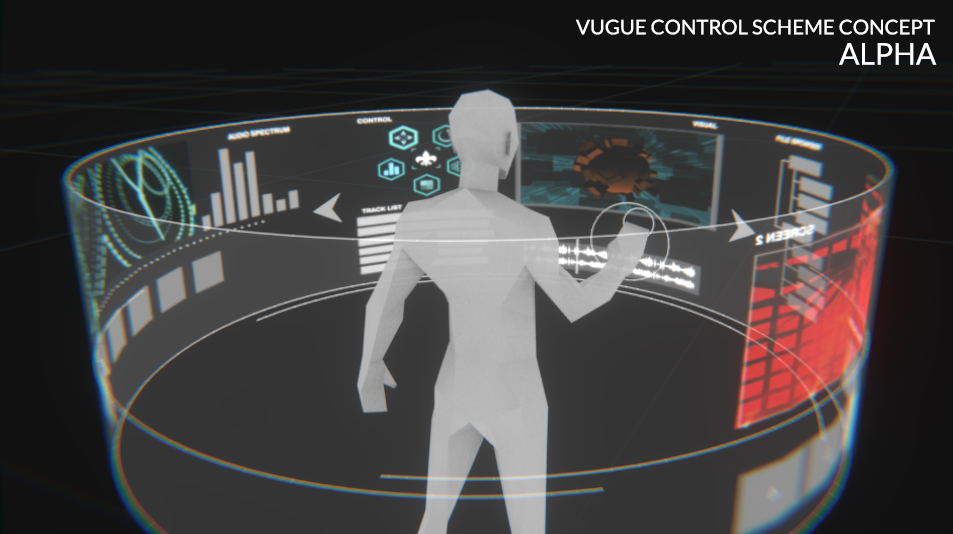

Use cases for VR/AR with Leap Motion as the control method

1. Gesture based consumption of media

I have been dreaming up stages for making the world addicted to gesture technology. The first case I can think of is a media box that connects to a TV, such as Apple TV or Chromecast. I suspect that people who buy a device like this, which sells for less than 100 dollars, would buy a gesture-controlled version for around 50 dollars more. I think it would be wise to make a device similar to these products, or even partner with their makers on one that has gesture-based control. People wouldn't mind paying more for an advanced version that didn't need a remote. As I did more research, I found that Samsung is already planning to release such a device. However, I understand Leap Motion is looking to expand into the VR headset world, so I expanded my search.

As I did further research, I found that Oculus is looking to market itself for watching movies, giving users a large-screen 3D experience from a headset.

John Carmack, the CTO of Oculus and the famous id Tech engine programmer, is working on an Oculus-based Netflix app. I believe John Carmack will not rely on keyboard and mouse control for Oculus. If I were in control of Oculus at the moment, I would be looking to acquire Leap Motion or Kinect as the standard technology to integrate into all future Oculus models. I have a feeling that Leap Motion may want to be acquired, and I have no problem putting together the storyboard proposals for that. The alternative is a partnership or deals with the many VR headset manufacturers looking to integrate Leap Motion technology, from Samsung to Google and others.

2. Video games (more or less an expansion of media consumption)

A VR headset with gesture-based controls would be great for games that involve powers. It can simulate telekinesis and give the player an experience that is more rewarding than using a controller. Bioshock immediately comes to mind.

Carmack himself has been at the forefront of pushing in-game UI controls. He has included features like this in his titles since Doom 3: Quake 4, Rage, etc. Here we have an in-game control panel where the mouse aim controls the cursor. Exploiting this aspect would probably impress him.

3. Drone flight controls

This is something I think would be fun for the drone market. VR headsets seem to go hand in hand with drone piloting. Why not add gesture controls to the heads-up display?

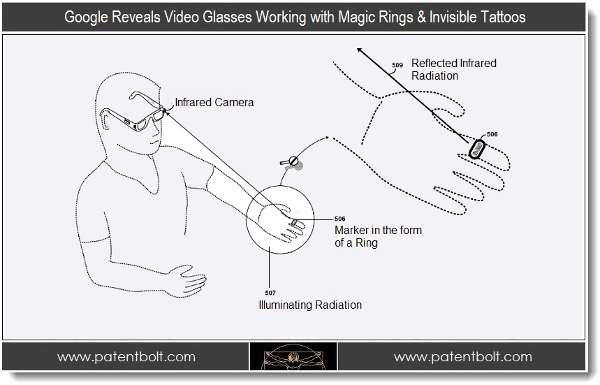

4. Google Glass and other augmented reality devices

Currently, this is what Google Glass has to offer. I personally don't want to wear this ridiculous piece of tech if it's an overglorified slide projector. I have used one, and it's very simple and limited in what it offers. I understand it's first-generation tech.

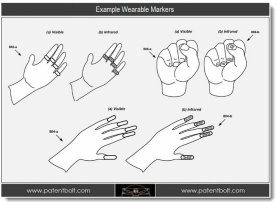

To match the granularity that Leap Motion offers, they would need five rings, or worse (are those fingernail extensions?). I would seriously consider making a proposal to Google that would prevent this.

This is their solution: an RFID-controlled tracking ring. So not only does the user have to wear this device on their face, but they also have to wear a ring. To me, this kind of experience belongs in a prototype, not a finished consumer product.

This is something I feel I could pitch to Google. Compare waking up and picking up your phone from the nightstand to check the news with putting on Google Glass. It's not that I believe gestures are everything. The most obvious thing to do in augmented reality is to automate as much as possible: automatically recognizing what you are looking at and bringing up the relevant information is important.

Hand gestures are still important. They are better than Google Glass's side-scrolling feature. Miniaturizing Leap Motion tech into Google Glass would be the way to go.

Now not only can you look into your fridge and get an automated analysis of your inventory, but your augmented glasses will also be able to see what you grab with Leap Motion, which offers more granularity and finer control over the information thrown at you. For example, picking up meat would show you recipes and when you bought it, rather than flooding you with information about everything in the fridge.

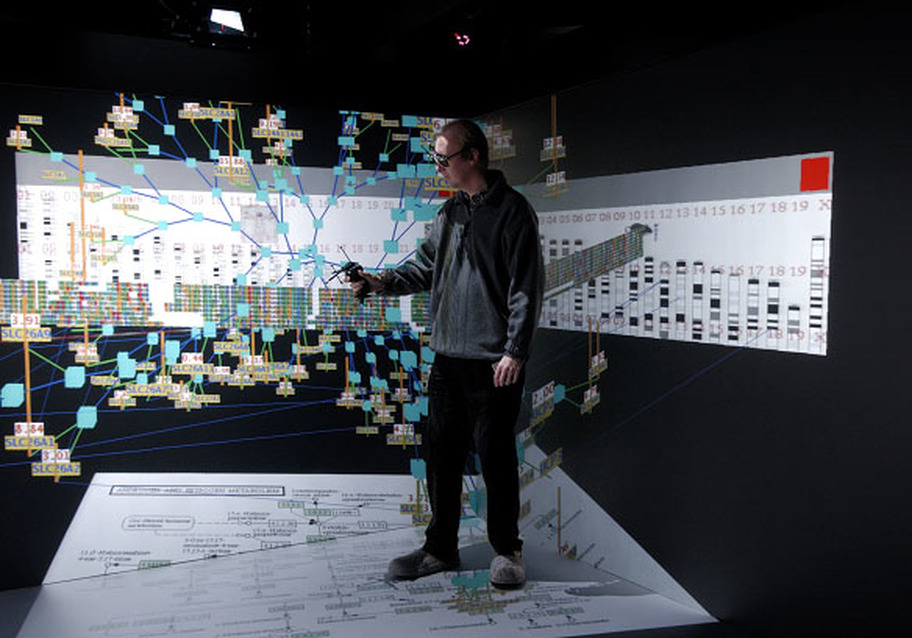

5. 3D data visualization

Using VR technology, we could monitor and explore data in 3D space.